The Token Tax: Why Your System Prompt is a Latency Leak

During the dot-com boom of the late 90s, the public discourse was fixated on valuations and “eyeballs.” The actual technical reality, however, was being written in fiber-optic cable and BGP tables. When the bubble burst, the internet didn’t disappear; it simply stopped being a novelty and became the infrastructure.

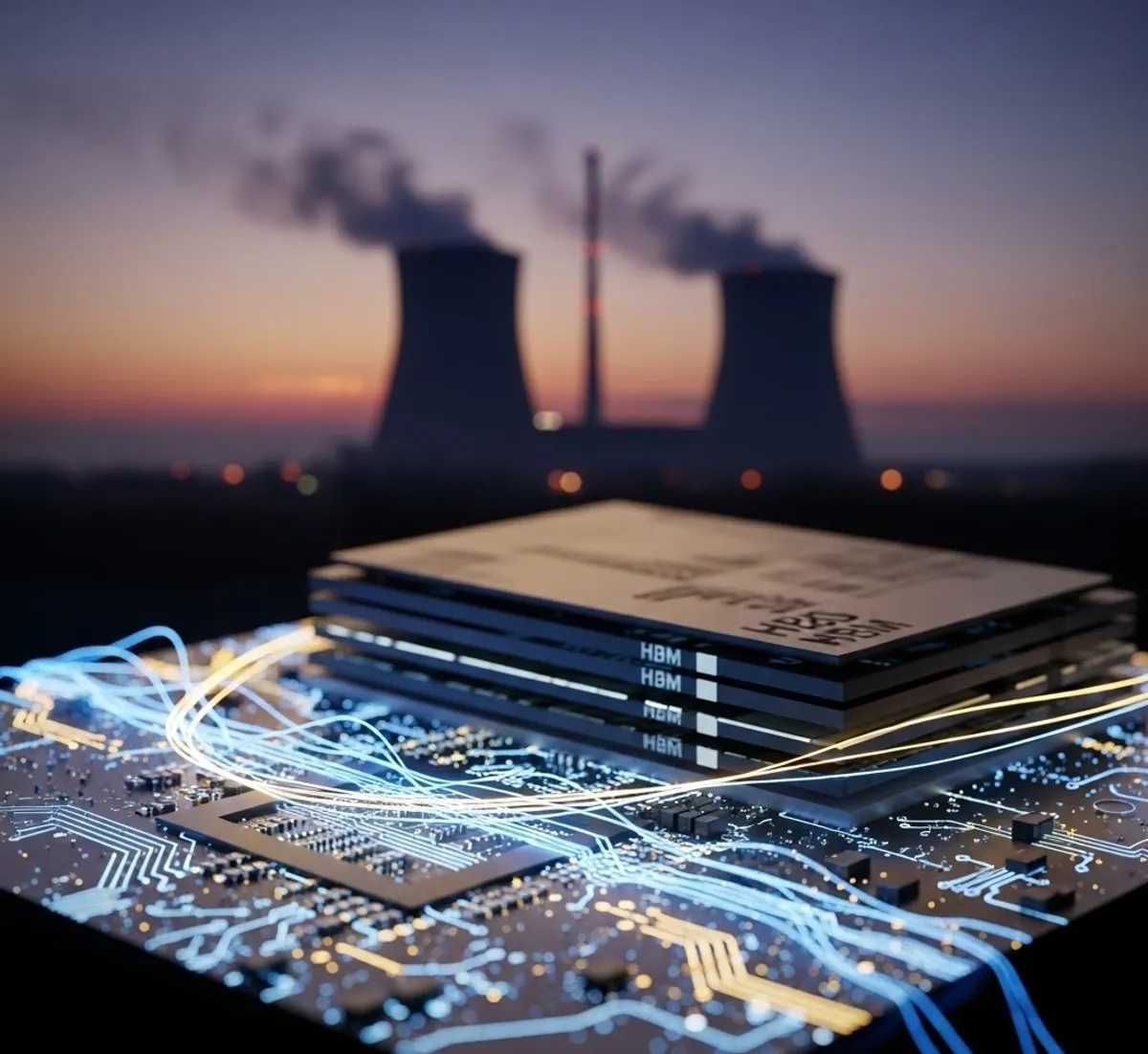

Today, we are surrounded by noise about an “AI bubble,” but builders are hitting a much more tangible wall: the physics of silicon and the scarcity of High Bandwidth Memory (HBM). Whether the current valuation hype deflates or not is secondary to the fact that global foundry and memory capacity has been fundamentally reallocated to prioritize AI training and inference hardware over personal computing.

The Wafer War: The Death of Priority

If you’ve tracked the pricing of high-end RAM or flagship GPUs recently, you’ve been watching a zero-sum game play out at the foundry level. Manufacturers are navigating a fundamental shift in wafer allocation, driven by two global titans: TSMC (the world’s largest contract logic foundry) and SK Hynix (the leading supplier of HBM). These companies are now the strategic gatekeepers of the global compute supply chain.

The CoWoS Revolution

To build a leading-edge AI accelerator (like the Nvidia Blackwell or AMD MI300), manufacturers have moved beyond traditional PCB mounting to CoWoS (Chip on Wafer on Substrate).

Unlike predecessor technologies, where the GPU and memory were separate chips soldered onto a board (2D packaging), CoWoS is a 2.5D packaging method. It places the logic die (often on TSMC’s 4N or N5 nodes) and the HBM stacks side by side on a silicon interposer, a slab of silicon whose microscopic wiring provides thousands of connections and per-stack bandwidth exceeding 1 TB/s (TSMC Technical Documentation, 2024). If CoWoS is the high-speed rail system of the chip, traditional packaging is a country road.
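The “exceeding 1 TB/s” figure falls out of simple arithmetic: peak bandwidth per stack is bus width times per-pin data rate. A minimal sketch, assuming a 1024-bit interface per stack and a per-pin rate of 9.2 Gb/s (in the range of HBM3e-class parts); the count of eight stacks is a hypothetical accelerator layout, not a specific product’s datasheet.

```python
def stack_bandwidth_bytes_per_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one HBM stack: width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbps * 1e9 / 8


if __name__ == "__main__":
    BUS_WIDTH_BITS = 1024  # assumed interface width per stack
    PIN_RATE_GBPS = 9.2    # assumed per-pin data rate (HBM3e-class)
    STACKS = 8             # hypothetical number of stacks on the interposer

    per_stack = stack_bandwidth_bytes_per_s(BUS_WIDTH_BITS, PIN_RATE_GBPS)
    print(f"per stack: {per_stack / 1e12:.2f} TB/s")
    print(f"{STACKS} stacks: {STACKS * per_stack / 1e12:.1f} TB/s")
```

Each of those 1,024 data lines per stack has to be routed through the interposer, which is why a multi-stack package needs thousands of connections at a density an ordinary board cannot provide.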

The Yield Bottleneck

However, this complexity comes with a massive “scrap” rate. HBM yields are notoriously low, often 40% to 50% lower than standard DRAM (TrendForce, 2024). To build an HBM3e stack, you must vertically layer 8 or 12 individual DRAM dies connected by TSVs (Through-Silicon Vias).

It’s a “one bad apple” problem. If a single die in a 12-high stack is defective, or if the bonding fails at the final layer, the entire stack is scrap. You aren’t just losing one chip; you are losing the accumulated value of 12 known-good dies and every bonding step that came before the failure. This is why a memory fab committing wafers to HBM is making a high-stakes gamble that de-prioritizes the GDDR6X needed for gaming or the DDR5 needed for workstations.
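The “one bad apple” effect compounds multiplicatively with stack height. A minimal sketch, assuming one bonding step per die and that every die and every bond succeed independently; the 98% die yield and 99% bond yield below are hypothetical placeholders, not TrendForce figures.

```python
def stack_yield(die_yield: float, bond_yield: float, layers: int) -> float:
    """Probability that an HBM stack of `layers` DRAM dies comes out fully good.

    Assumes one bonding step per die and that every die and every bond
    succeeds independently, so a single failure scraps the whole stack.
    """
    return (die_yield * bond_yield) ** layers


if __name__ == "__main__":
    # Hypothetical per-die and per-bond yields, chosen only to show compounding.
    for layers in (8, 12):
        y = stack_yield(die_yield=0.98, bond_yield=0.99, layers=layers)
        print(f"{layers}-high stack: {y:.1%} of stacks survive")
```

Because the per-layer success rate is raised to the power of the stack height, moving from 8-high to 12-high lowers stack yield even when nothing about the individual dies changes.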

The “Token Tax”: Your System Prompt is Technical Debt

I recently called a large, green-branded insurance provider here in Germany to update some policy data. I expected the usual “Press 1 for Sales” purgatory. Instead, I was met by an AI agent so efficient and low-latency that it got me thinking about the underlying infrastructure. That level of performance isn’t just “good AI”; it’s a sign of a highly optimized Inference Stack.

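Before we talk about optimization, we have to understand the unit of measure: the Token. A token is a chunk of text, usually a word or word fragment, that a tokenizer maps to an integer ID; the model then swaps that ID for a vector and does all of its math on vectors. The token is the bridge between language and computation. Every token costs a roughly fixed amount of compute (on the order of 2 FLOPs per model parameter in a forward pass) and claims memory (VRAM) for its entry in the KV Cache.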
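To make the abstraction concrete: a tokenizer segments text into pieces from a fixed vocabulary, and each piece’s integer ID selects a row of an embedding table. A minimal sketch, assuming a hypothetical seven-entry vocabulary, a hypothetical 4-wide embedding table and a greedy longest-match segmenter; production models use learned BPE or SentencePiece vocabularies and embedding widths in the thousands, so this shows the shape of the mapping, not any real tokenizer.

```python
from typing import Dict, List

# Hypothetical toy vocabulary; production tokenizers learn tens of thousands of pieces.
VOCAB: Dict[str, int] = {"<unk>": 0, "un": 1, "happi": 2, "ness": 3, "insur": 4, "ance": 5, " ": 6}

# Hypothetical embedding table: row i is the vector for token ID i.
# Real models use widths in the thousands; 4 keeps the example readable.
EMBED_DIM = 4
EMBEDDINGS: List[List[float]] = [
    [0.1 * (token_id + 1) * (d + 1) for d in range(EMBED_DIM)] for token_id in range(len(VOCAB))
]


def tokenize(text: str, vocab: Dict[str, int] = VOCAB) -> List[int]:
    """Greedy longest-match segmentation of `text` into vocabulary IDs."""
    max_len = max(len(piece) for piece in vocab)
    ids: List[int] = []
    i = 0
    while i < len(text):
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                ids.append(vocab[piece])
                i += length
                break
        else:
            # No vocabulary piece starts here: emit the unknown token and move on.
            ids.append(vocab["<unk>"])
            i += 1
    return ids


def embed(ids: List[int]) -> List[List[float]]:
    """Look up one vector per token ID; everything downstream is math on these."""
    return [EMBEDDINGS[token_id] for token_id in ids]


if __name__ == "__main__":
    ids = tokenize("unhappiness insurance")
    print(ids)
    print(embed(ids)[0])
```

The point is the pipeline, not the vocabulary: text becomes IDs, IDs become vectors, and from then on every token costs compute and memory whether or not it carries useful information.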

The KV Cache Analogy

Think of the inference server as a waiter in a busy restaurant, and the KV Cache as his notebook.

- TTFB (Time to First Byte): This is the delay between your request and the first hint of a response; in LLM serving the same idea is usually measured as TTFT (Time to First Token). It is dictated by the Prefill phase, in which the model processes the entire prompt before emitting anything. If your “System Prompt” is 3,000 tokens long, it’s like handing the waiter a 50-page manual on how to be a waiter before he’s allowed to take your order.

- KV Cache Pressure: As the conversation continues, the waiter has to keep every word you’ve said in his active memory. The longer you talk, the more “notebooks” (VRAM) he needs.
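The prefill delay can be estimated on the back of a napkin. A common rule of thumb is that a dense transformer spends about 2 FLOPs per parameter for every prompt token, so prefill time scales with prompt length divided by sustained compute. A minimal sketch, assuming a compute-bound prefill; the 70B model size, 1e15 FLOP/s peak and 40% utilization are hypothetical deployment numbers, and queueing, network and tokenization time are ignored.

```python
def estimate_ttft_seconds(
    prompt_tokens: int,
    n_params: float,
    peak_flops: float,
    utilization: float,
) -> float:
    """Rough compute-bound estimate of the prefill part of time to first token.

    Assumes ~2 FLOPs per parameter per prompt token and that the accelerator
    sustains `utilization` of `peak_flops`. Queueing, network and
    tokenization time are ignored, as is the attention-score term.
    """
    prefill_flops = 2.0 * n_params * prompt_tokens
    return prefill_flops / (peak_flops * utilization)


if __name__ == "__main__":
    # Hypothetical deployment: 70B dense model, 1e15 FLOP/s peak, 40% utilization.
    for tokens in (300, 3_000):
        seconds = estimate_ttft_seconds(tokens, 70e9, 1e15, 0.4)
        print(f"{tokens:>5} prompt tokens -> ~{seconds * 1000:.0f} ms of prefill")
```

Under these assumptions the estimate is linear in prompt length, so a 3,000-token system prompt costs ten times the prefill of a 300-token one on every request that does not reuse a cached prefix.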

If a provider deploys a bloated agent, they aren’t just paying for the API call; they are locking up VRAM for the KV Cache of every session, which on large models with long contexts runs to gigabytes. Compressing a prompt by 15% is the digital equivalent of giving the waiter a better shorthand: it increases throughput and reduces the energy wasted on re-processing static instructions.
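The VRAM figure follows from how the KV Cache is laid out: every layer stores one key vector and one value vector per KV head for every token in context. A minimal sketch, assuming an FP16 cache and a hypothetical 70B-class configuration (80 layers, 8 KV heads under grouped-query attention, head dimension 128); the 100 concurrent sessions are likewise hypothetical.

```python
def kv_cache_bytes(
    tokens: int,
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    bytes_per_elem: int = 2,  # FP16/BF16 cache entries
) -> int:
    """Bytes of KV Cache held for `tokens` tokens of context.

    The leading 2 accounts for storing both a key and a value vector per KV
    head, per layer, per token.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * tokens


if __name__ == "__main__":
    # Hypothetical 70B-class shape with grouped-query attention.
    shape = {"n_layers": 80, "n_kv_heads": 8, "head_dim": 128}
    prompt = 3_000
    trimmed = prompt * 85 // 100  # the same prompt compressed by 15%
    sessions = 100                # hypothetical concurrent sessions

    full_b = kv_cache_bytes(prompt, **shape)
    saved_b = (full_b - kv_cache_bytes(trimmed, **shape)) * sessions
    print(f"system prompt KV per session: {full_b / 1e9:.2f} GB")
    print(f"freed across {sessions} sessions: {saved_b / 1e9:.1f} GB")
```

A complementary design choice is prefix caching: serving engines such as vLLM can keep the KV blocks of an identical system prompt and share them across requests, so the static instructions are neither recomputed nor stored once per session.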

The Bridge: From Tokens to Joules

This is where the math becomes physical. A token isn’t just a linguistic unit; it’s a thermal one.

In a high-density datacenter, generating 1,000 tokens isn’t “free.” It requires electrical work to shuttle weights and activations between the HBM stacks and the logic die across the interposer. Every “prefill” of a bloated system prompt is a burst of energy consumption, and virtually all of that energy ends up as heat. If you are processing millions of tokens a second, you are running a high-speed conversion engine that turns Baseload Power into Synthetic Reasoning.
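The token-to-joule bridge is a single division: power in watts (joules per second) over throughput in tokens per second. A minimal sketch, assuming an accelerator fully loaded at 700 W, a hypothetical aggregate throughput of 2,000 tokens/s, a hypothetical PUE (power usage effectiveness) of 1.3, and two hypothetical electricity prices that stand in for a cheap and an expensive grid rather than actual US or German tariffs.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per token: power (J/s) divided by throughput (tokens/s)."""
    return power_watts / tokens_per_second


def energy_cost_per_million_tokens(
    power_watts: float, tokens_per_second: float, price_per_kwh: float, pue: float = 1.0
) -> float:
    """Electricity cost of one million tokens.

    `pue` scales accelerator energy up to facility energy to account for
    cooling and power-distribution overhead.
    """
    kwh = joules_per_token(power_watts, tokens_per_second) * 1e6 / 3.6e6  # 1 kWh = 3.6 MJ
    return kwh * pue * price_per_kwh


if __name__ == "__main__":
    POWER_W = 700       # accelerator power, fully loaded (assumption)
    THROUGHPUT = 2_000  # hypothetical aggregate tokens/s across all sessions
    PUE = 1.3           # hypothetical facility overhead
    print(f"{joules_per_token(POWER_W, THROUGHPUT):.2f} J per token, almost all of it ending up as heat")
    for price in (0.10, 0.25):  # hypothetical prices per kWh
        cost = energy_cost_per_million_tokens(POWER_W, THROUGHPUT, price, PUE)
        print(f"at {price:.2f}/kWh: {cost:.4f} per million tokens")
```

Whatever the real inputs are, the electricity cost per token scales linearly with the price per kWh, which is the mechanism behind the “cost per thought” argument.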

In our previous analysis of The Sovereignty Paradox, we looked at how energy policy dictates cognitive capacity. This is the Inference Gap. If the cost per kilowatt-hour is significantly higher in Germany due to legacy energy policies, specifically the 2011 nuclear phase-out decided by the CDU/CSU-led coalition (German Federal Government Archive, 2011), then the “cost per thought” becomes a competitive disadvantage.

While US hyperscalers are underwriting the revival of nuclear sites like Three Mile Island to ensure their “Token-to-Joule” conversion remains cheap and reliable (Constellation Energy, 2024), European infrastructure is currently gated by energy volatility. You cannot run a Tier-1 AI economy if your baseload is too expensive to feed the foundries and datacenters that generate these tokens.

The Architect’s Pivot: The Efficiency Mandate

Everyone likes to make grand, sweeping statements about the AI revolution. I prefer the “back of the napkin” calculations that actually dictate whether a system is a viable piece of engineering or just an expensive hobby. To reach true Inference Density, these are the numbers we have to respect:

- Quantization is the Default: Running models in FP16 (16-bit) for standard tasks is an engineering failure.

  - The Math: A 7-billion-parameter model in FP16 requires ~14GB of VRAM just to load its weights (Standard Model VRAM Allocation, 2023). Moving to 4-bit (int4) quantization reduces the weight footprint to ~3.5GB, a 75% reduction in memory overhead.

  - The Evidence: Research into AWQ (Activation-aware Weight Quantization) has demonstrated that 4-bit quantization retains >99% of the predictive accuracy for most reasoning tasks (MIT/Lin et al., 2023). If you aren’t quantizing, you are paying a 300% “inefficiency tax” (four times the weight memory) for a 1% gain in fidelity.
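The memory arithmetic above, and what 4-bit storage actually means, can be sketched in a few lines. This is a minimal sketch of plain round-to-nearest (RTN) symmetric int4 quantization with one shared scale per group, not AWQ itself; AWQ additionally protects salient weight channels by rescaling them based on activation statistics before rounding. The group size of 128 and the sample weights are assumptions for illustration.

```python
from typing import List, Tuple


def weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantize_group_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric round-to-nearest int4 quantization of one weight group.

    Maps floats onto integers in [-8, 7] with a single shared scale.
    Plain RTN only; no activation-aware rescaling as in AWQ.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_group(q: List[int], scale: float) -> List[float]:
    """Recover approximate floats; each error is at most scale / 2."""
    return [v * scale for v in q]


if __name__ == "__main__":
    n = 7e9
    group_size = 128  # assumed: one FP16 scale stored per 128 weights
    print(f"FP16: {weight_gb(n, 16):.1f} GB")
    print(f"int4 (ideal): {weight_gb(n, 4):.1f} GB")
    print(f"int4 + scales: {weight_gb(n, 4 + 16 / group_size):.2f} GB")

    group = [0.42, -0.13, 0.07, -0.38]  # hypothetical weights
    q, s = quantize_group_int4(group)
    print(q, [round(x, 3) for x in dequantize_group(q, s)])
```

The third printed line shows why real int4 checkpoints land slightly above the ideal 3.5GB: each group also stores its scale.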

- Context Management: Stop dumping entire databases into the prompt.

  - The Math: Self-attention in standard Transformer architectures has quadratic complexity, $O(n^2)$, in context length $n$ (Vaswani et al., “Attention Is All You Need,” 2017). Doubling your prompt length quadruples the work in the attention-score computation, while the per-token projections and MLP layers only double, so the total cost lands somewhere between 2× and 4×.

  - The Evidence: Every unnecessary token in your context window adds a fixed slice to your KV Cache (memory grows linearly with length) and makes the attention step for every later token more expensive. Use semantic search (RAG) to inject only what is necessary.
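The split between quadratic and linear costs is easy to see with the standard back-of-the-envelope FLOP counts. A minimal sketch, assuming a hypothetical 70B-class dense model (model width 8192, 80 layers) and ignoring causal masking, which roughly halves the attention term without changing its n² growth.

```python
def attention_score_flops(n_tokens: int, d_model: int, n_layers: int) -> int:
    """FLOPs for Q @ K^T and for the attention-weighted sum over V in an n-token prefill.

    Q @ K^T is (n x d)(d x n) and the weighted sum is (n x n)(n x d); each costs
    about 2 * n * n * d FLOPs per layer. Causal masking is ignored.
    """
    return n_layers * 2 * (2 * n_tokens * n_tokens * d_model)


def dense_flops(n_tokens: int, n_params: float) -> float:
    """Projection and MLP FLOPs: about 2 per parameter per token, linear in n."""
    return 2 * n_params * n_tokens


if __name__ == "__main__":
    # Hypothetical 70B-class dense model.
    D_MODEL, N_LAYERS, N_PARAMS = 8192, 80, 70e9
    for n in (4_000, 32_000, 128_000):
        attn = attention_score_flops(n, D_MODEL, N_LAYERS)
        dense = dense_flops(n, N_PARAMS)
        share = attn / (attn + dense)
        print(f"{n:>7} tokens: attention is {share:.0%} of prefill FLOPs")
```

At short contexts the linear projection and MLP work dominates; the quadratic attention term becomes a large share as prompts grow into the tens of thousands of tokens, which is exactly where database-dumping prompts end up.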

- Edge Offloading: Not every task requires a trillion-parameter model.

  - The Math: A datacenter H100 GPU in the SXM form factor has a TDP of up to 700W (NVIDIA Technical Specs, 2024). A modern mobile NPU performs similar intent-extraction tasks at <15W (Apple/Qualcomm Silicon Specs, 2024), a power envelope more than 45× smaller.

  - The Evidence: By offloading “Low-Entropy” tasks (simple classification, date extraction) to the edge, you save the “Silicon-to-Energy” conversion for the hard problems where the parameter count actually matters.
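Offloading usually comes down to a router in front of the expensive model. A minimal sketch, assuming a hypothetical `route` function, a hypothetical `Routed` result type and hypothetical task names; the regular expression for German-style DD.MM.YYYY dates is only a stand-in for whatever small on-device model would run on the NPU, not an NPU API.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-in for an on-device model: a German-style DD.MM.YYYY date pattern.
DATE_PATTERN = re.compile(r"\b(\d{1,2})\.(\d{1,2})\.(\d{4})\b")


@dataclass
class Routed:
    target: str            # "edge" or "datacenter"
    result: Optional[str]  # filled in only when the edge path resolved the task


def route(task: str, text: str) -> Routed:
    """Hypothetical router: resolve low-entropy tasks on the edge, escalate the rest."""
    if task == "extract_date":
        match = DATE_PATTERN.search(text)
        if match:
            day, month, year = match.groups()
            return Routed("edge", f"{year}-{int(month):02d}-{int(day):02d}")
    # Anything the cheap path cannot resolve goes to the large hosted model.
    return Routed("datacenter", None)


if __name__ == "__main__":
    print(route("extract_date", "Policy starts on 01.04.2025"))
    print(route("summarize", "Please explain my coverage options"))
```

The design choice is to fail upward: when the cheap path cannot resolve a request it escalates rather than guessing, so the datacenter model only sees the requests that actually need it.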

The utility of LLMs will survive the hype, just as the internet survived the dot-com crash. But the winners will be the ones who stopped treating tokens as “free” and started treating them as the expensive infrastructure resources they actually are.

Technical References & Fact Checks

- CoWoS & 2.5D Packaging: TSMC, Advanced Packaging Technology (CoWoS-S/R/L) (2024).

- HBM Yield Challenges: TrendForce, High Bandwidth Memory (HBM) Market Outlook 2024 (2024).

- KV Cache & PagedAttention: Kwon et al., “Efficient Memory Management for Large Language Model Serving with PagedAttention” (vLLM, UC Berkeley, 2023).

- Quantization Benchmarks: Lin et al., “AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration” (MIT, 2023).

- Attention Complexity: Vaswani et al., “Attention Is All You Need” (Google Research, 2017).

- Nuclear Revival: Microsoft and Constellation Energy, Three Mile Island Restart Agreement (2024).

- Energy Policy History: German Federal Government (Bundesregierung), History of the 2011 Atomausstieg (nuclear phase-out) (2011).